Should You Be Tracking NPS?

Sprig's guide to the much-debated customer loyalty metric

Much has been written about Net Promoter Score (NPS) over the years, some of it positive and some of it negative. Notwithstanding the criticism, many, if not most, businesses today use NPS surveys to monitor their customer experience.

NPS can serve a strategic purpose in some circumstances and can be a valuable tool for getting an organization focused on the customer. However, as a single metric for understanding a company’s customer experience, NPS has some serious failings.

What is NPS?

Net Promoter Score is a metric intended to measure the customer experience of a product or service. It is based on a single question: “How likely are you to recommend [Company] to a friend or colleague?”

One of the distinctive aspects of the NPS question is its eleven-point scale, where 0 = Not at all likely to recommend and 10 = Extremely likely to recommend. NPS is calculated by taking the percentage of respondents who give a rating of 9 or 10 (promoters) and subtracting the percentage who give a rating of 0 to 6 (detractors). Those who give a rating of 7 or 8 (passives) count toward the total number of respondents but are not counted in either group. The result can range from -100 (every respondent is a detractor) to +100 (every respondent is a promoter).
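To make the formula concrete, here is a minimal Python sketch of the standard calculation. The function name `net_promoter_score` and the sample ratings are hypothetical, chosen only for illustration.

```python
from collections.abc import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Return NPS (-100 to +100) for a collection of 0-10 ratings."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("need at least one rating")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be on the 0-10 scale")
    promoters = sum(1 for r in ratings if r >= 9)   # ratings of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # ratings of 0 through 6
    # Passives (7s and 8s) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical survey: 4 promoters, 3 passives, 3 detractors.
ratings = [10, 9, 9, 8, 7, 7, 6, 5, 3, 10]
print(net_promoter_score(ratings))  # 40% promoters - 30% detractors = 10.0
```

Note how the three passives still matter: they are part of the denominator, so they dilute both percentages even though they are never counted as promoters or detractors.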

Why Do So Many Companies Use NPS?

When NPS was first developed, it was innovative in its promise that the entire customer experience could be understood through the answer to a single question that any business could use. Its promise to be the only number you need helped NPS establish a foothold, and it has since been adopted by companies large and small, including many software companies and startups.

- NPS is widely recognized by corporate leaders. Because NPS has become so widespread, it’s a number most executives are familiar with, and they're often eager to know how their company stacks up. Using NPS can make it easier to get leadership teams focused on customer experience metrics.

- NPS is benchmarkable. Whether through buying competitive data or a simple Google search, it’s fairly easy to get a gauge of how your company’s NPS compares to industry averages. This makes it easy to answer the inevitable question: “But is this score good?”

- NPS is easy. It's just one question, complete with its own magic formula, that literally any business can use with no customization. Add to that the slew of tools catering to companies wishing to measure NPS and you can get up and running pretty quickly.

These points paint a compelling picture of the ease of implementing NPS. What's conspicuously absent is any selling point about the ease of interpreting NPS results. There are several reasons for that.

Challenges with Measuring Customer Health Using NPS

Net Promoter Score’s limitations include both methodological failings and business-fit issues, all of which create practical problems that leave companies guessing about their customers' needs and unable to predict retention.

The NPS question is overly broad, making results "noisy."

One of the things that makes NPS most appealing, its broadly applicable question, is also one of its key failings. The question is so generic that it leads survey-takers to consider factors external to their customer experience when answering, such as the economy, the time of year, or whether their own personal networks include people who might be interested in your product.

While many of these external factors are relevant to your overall business, they aren't relevant to what you're trying to measure: the experience your customers themselves are having with your product. This creates "noise" in the numbers, making shifts in the score difficult to interpret, especially during times of crisis or change (e.g., an economic recession). Further, it can lead to a disconnect between your NPS score and the business metrics it is intended to predict, like conversion and churn.

The NPS scale and calculation can make it a misleading indicator.

A substantial body of research shows that the more points a scale has, the less consistently different people use it. One person’s 9 is another person’s 8; one person’s 7 is another person’s 6.

Because the NPS calculation uses hard cutoffs between rating categories (0-6, 7-8, 9-10) and gives no direct weight to some ratings (7s and 8s count only toward the total), scores can fluctuate substantially from week to week or month to month based on essentially meaningless differences, such as a handful of respondents choosing 8 instead of 9. Further, because there is no differentiation between a rating of 0 and a rating of 6, real progress in the customer experience can be masked.
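The following Python sketch illustrates both effects with hypothetical ratings from ten respondents (the data and the helper name `nps` are invented for illustration). Raising the three lowest ratings to 6 leaves NPS unchanged at -20 while the simple average rises from 6.1 to 7.3; nudging two 8s up to 9 moves NPS by 20 points while the average rises by only 0.2.

```python
from statistics import fmean


def nps(ratings: list[int]) -> float:
    """Standard NPS: % of ratings that are 9-10 minus % that are 0-6."""
    promoters = sum(r >= 9 for r in ratings)
    detractors = sum(r <= 6 for r in ratings)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical ratings from ten respondents.
baseline = [10, 9, 8, 8, 7, 7, 6, 4, 2, 0]
# The three least satisfied respondents move up to 6: a real improvement.
low_end_improves = [10, 9, 8, 8, 7, 7, 6, 6, 6, 6]
# Two respondents move from 8 to 9: a one-point change on each rating.
two_nudges = [10, 9, 9, 9, 7, 7, 6, 4, 2, 0]

for label, ratings in [("baseline", baseline),
                       ("low end improves", low_end_improves),
                       ("two 8s become 9s", two_nudges)]:
    print(f"{label:18} NPS={nps(ratings):6.1f}  mean={fmean(ratings):.1f}")
```

The improvement among the least satisfied respondents is invisible to NPS because every rating from 0 to 6 counts the same, while a one-point shift that happens to cross the 8/9 cutoff swings the score by 20 points.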

The upshot is that executives may ask you why NPS dropped by 8 points, or why it hasn’t moved at all, and you may be unable to answer because there may be no underlying explanation.

NPS ignores critical parts of the customer journey.

To answer the NPS question meaningfully, respondents must already be users of the product or service under consideration, because people can’t judge whether they would recommend a product before they know it. As a result, companies using NPS can collect meaningful feedback from only a portion of their relevant target audience.

For example, while new customers can technically answer the NPS question, they tend to give lower ratings during the onboarding period than later in the journey. This doesn't necessarily mean they aren't having a great experience; rather, it's at least partly because they aren't yet familiar enough with your product to confidently put their reputations on the line by recommending it. As a result, NPS ratings collected during onboarding aren't very meaningful.

NPS isn't really a measure of customer health.

Finally, by asking about customers’ likelihood to recommend a product, NPS overlooks these users' own personal experiences with it, opening a large gap in companies’ understanding of their customers, which is the very thing they are trying to measure.

One might argue that someone is unlikely to recommend a product they themselves are not happy with; that may be the case for most, but it certainly isn’t a universal truth. And if we’re making excuses for NPS already, does it seem like a good idea to use this single question as the basis for our understanding of the customer experience?

At the very least, the question we ask should be the question we actually want answered. For companies primarily focused on acquisition, especially when word of mouth is a major focus, NPS is fine. But for those wanting to predict retention or understand the experience at specific stages of the customer journey, it would be much more effective to ask questions focused on those things.

How to Move Beyond NPS

If you've decided you're ready to move beyond NPS and start implementing new metrics, whether alongside NPS or as a replacement, there are a few simple guidelines to follow. These rules will help you ensure you're addressing the limitations of NPS and deepening your understanding of customer needs. Feel free to use the best practice examples below exactly as they are, or simply as inspiration for your own metrics.

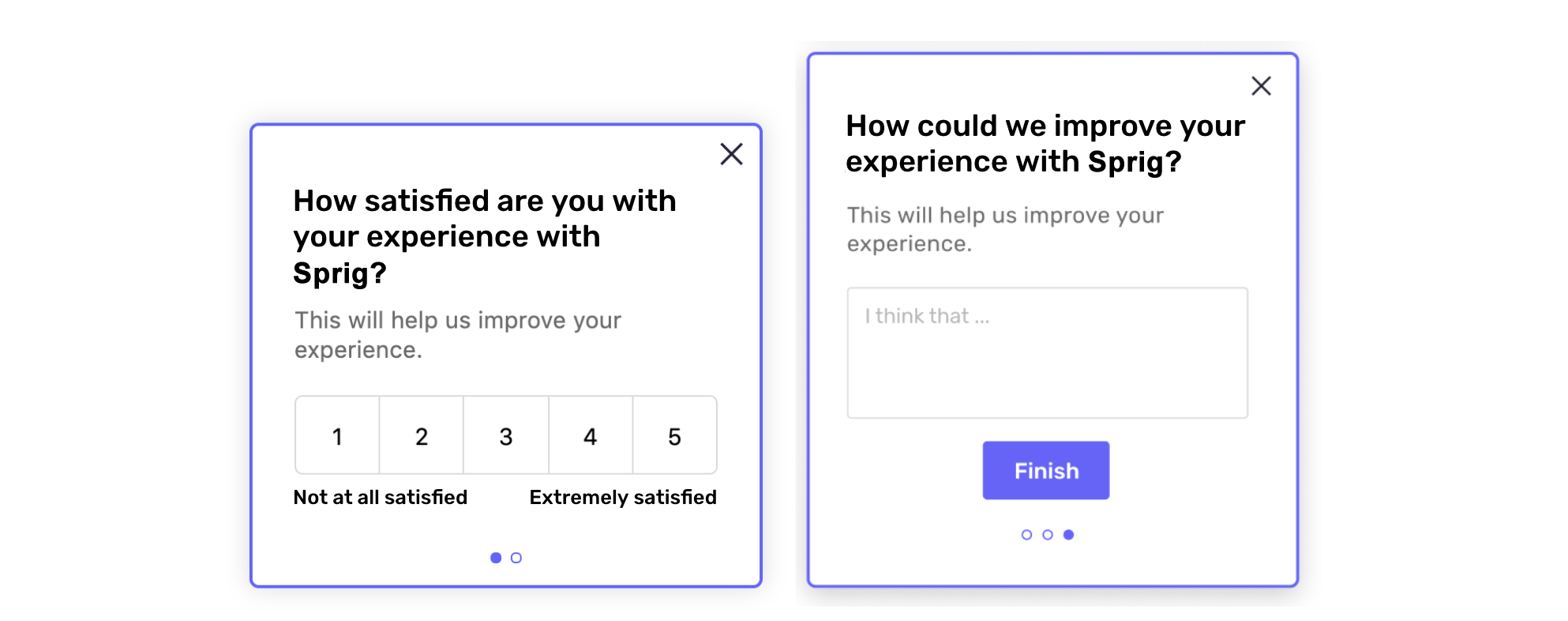

- Focus on the experience of your users. To avoid the influence of external noise while also increasing the actionability of open-ended feedback, make sure your question is focused squarely on users' own experiences with your product. This doesn't have to be complicated; you can simply ask them how satisfied they are with your product, how they would rate their experience with it, or how well it meets their needs. Pretty easy, right?

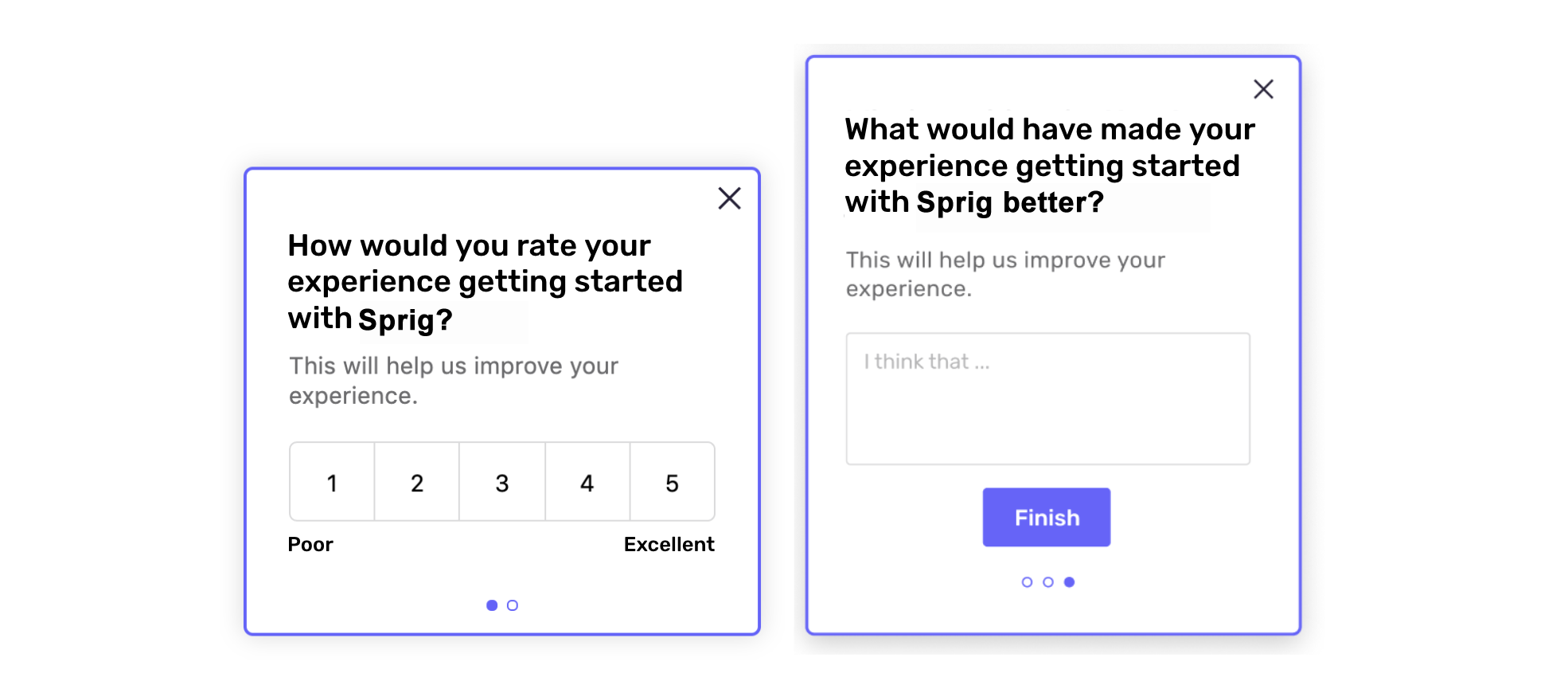

- Make it relevant to users' stage of the journey. It's important to understand the customer experience across all stages of the journey, but to do so effectively, you must ask questions that are focused on those specific stages. For example, to understand the onboarding experience, you may want to ask users about their experience getting started with your product, rather than their experience in general; similarly, if you offer a paid subscription, you may want to ask paying users about their satisfaction with their subscription rather than their satisfaction in general. Small tweaks that tailor question wording to specific points in the journey will lead to more valid and reliable ratings and generate more specific feedback.

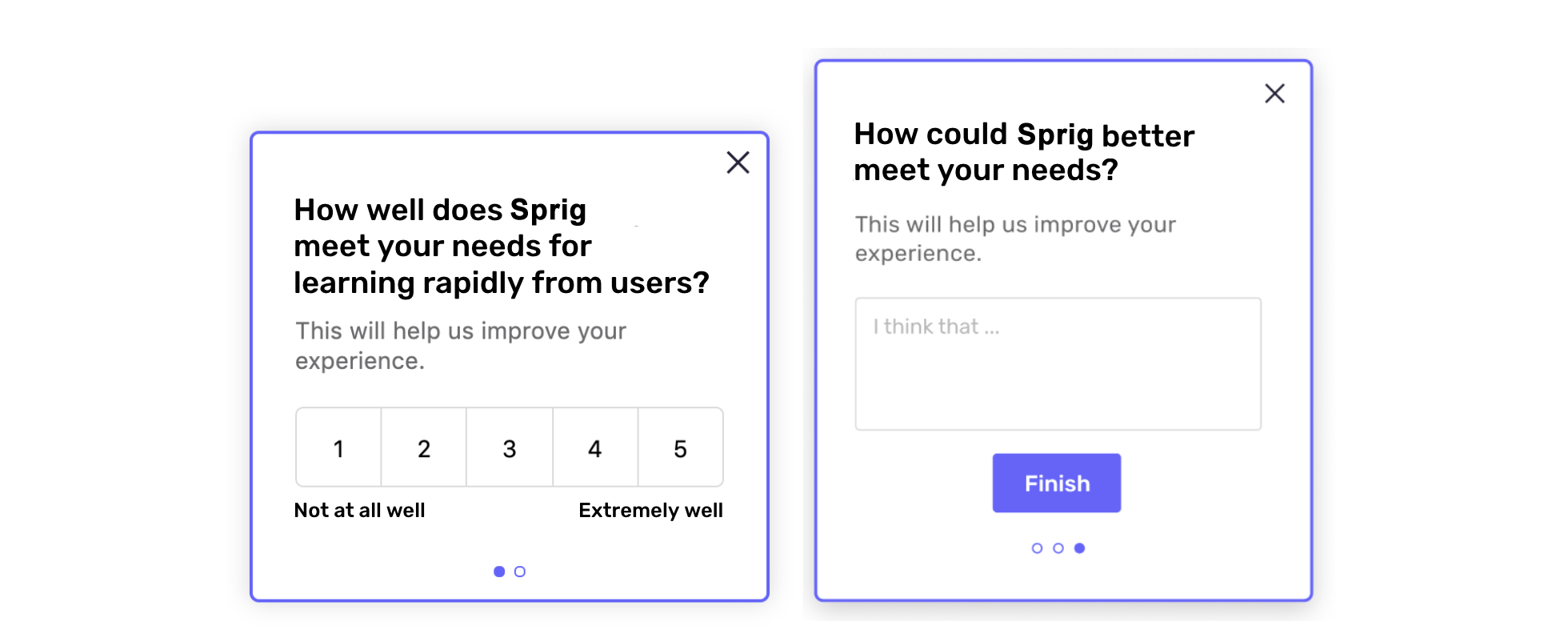

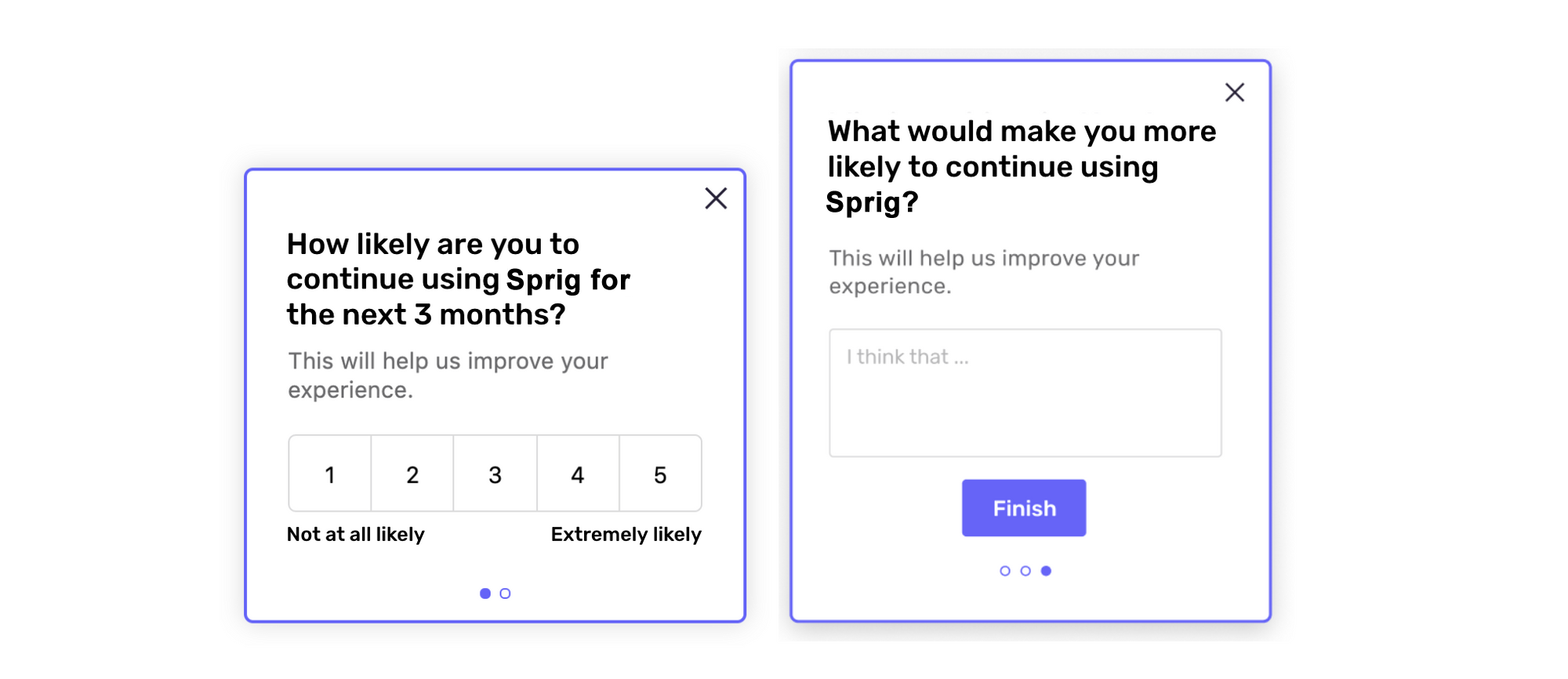

- Make it relevant to your business goals. Consider what you are trying to learn through your customer experience metrics, then ensure the questions you ask will give you those answers. If you want to understand the user experience with your product, ask about the experience with your product; if you want to understand users' loyalty or identify retention risk, ask users how likely they are to continue using your product; if you'd like to understand how well you're delivering on your overall value proposition, ask about that; and yes, if you'd like to understand word-of-mouth recommendation, then the NPS question works fine.

- Calculate the score from a simple average. Finally, don't get fancy with your calculation. A magic formula only adds complication, so stick with a simple average that everyone can understand. Because an average gives every rating equal weight, it also captures changes across both the low and high ends of the scale.
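Here is a minimal Python sketch of this approach, assuming a hypothetical 1-5 satisfaction question and responses tagged with a reporting period. The function name `average_score_by_period`, the scale bounds, and the sample data are all illustrative assumptions, not a prescribed implementation.

```python
from collections import defaultdict
from statistics import fmean


def average_score_by_period(responses: list[tuple[str, int]],
                            low: int = 1, high: int = 5) -> dict[str, float]:
    """Average rating per period (e.g. per month) on a low..high scale."""
    by_period: dict[str, list[int]] = defaultdict(list)
    for period, rating in responses:
        if not low <= rating <= high:
            raise ValueError(f"rating {rating} is outside {low}-{high}")
        by_period[period].append(rating)
    # Every rating counts with equal weight: no cutoffs, nothing excluded.
    return {period: round(fmean(rs), 2)
            for period, rs in sorted(by_period.items())}


# Hypothetical answers to "How satisfied are you with [Product]?" (1-5).
responses = [("2024-01", 4), ("2024-01", 5), ("2024-01", 2),
             ("2024-02", 4), ("2024-02", 4), ("2024-02", 3), ("2024-02", 5)]
print(average_score_by_period(responses))
# {'2024-01': 3.67, '2024-02': 4.0}
```

Because every rating carries equal weight, a respondent moving from 2 to 3 shifts the average just as much as one moving from 4 to 5, which makes changes in the number straightforward to explain.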

What to Do If You’re Already Using NPS

You’ll probably need to continue measuring NPS in the short term to maintain consistency of tracking, but you should take additional steps to close gaps in your understanding of customers. Start by tracking new metrics alongside NPS that meet the criteria outlined above. Consider piloting a handful of questions, reviewing trends and insights for a few months, and sticking with the ones that work well for your business. Then gradually shift your focus away from NPS and toward other, more useful metrics.

In addition, if volatility in NPS scores has been an issue, one way to get a steadier signal from the data you already have is to recalculate your scores as a simple average of the 0-10 ratings. You’ll typically see similar overall trends but with fewer large fluctuations, and you'll have a clearer understanding of what's happening with the ratings.

What to Do If You’re Considering Using NPS

The ease of getting started with NPS can make it appealing, especially to companies that have never measured their customer experience before; however, the inevitable headaches involved in interpreting and acting on NPS data should not be dismissed. Ideally, you would start from scratch with more effective metrics, such as the examples shared above. If you do decide to use NPS, don't use it alone: choose at least a few other metrics to track alongside it. That way, if and when NPS starts giving you those headaches, you can move away from it and rely on the other metrics you're already tracking.
