Question Types

Learn about the question types available in Sprig.

Supported Question Types

| Question Type | Description | Study Type | Delivery Method | Availability | Survey Format |

|---|---|---|---|---|---|

| Rating Scale | Also known as a Likert scale. A rating scale to measure respondents' attitudes toward a statement. | All (In-Product Surveys, Feedback, Long-Form Surveys, Prototype Tests) | All (Web, Mobile, Shareable Link) | All Plans | Both (Conversational and Standard) |

| Open Text | Open text response to a text question. The themes analysis is performed on the response to this question type. | All | All | All Plans | Both |

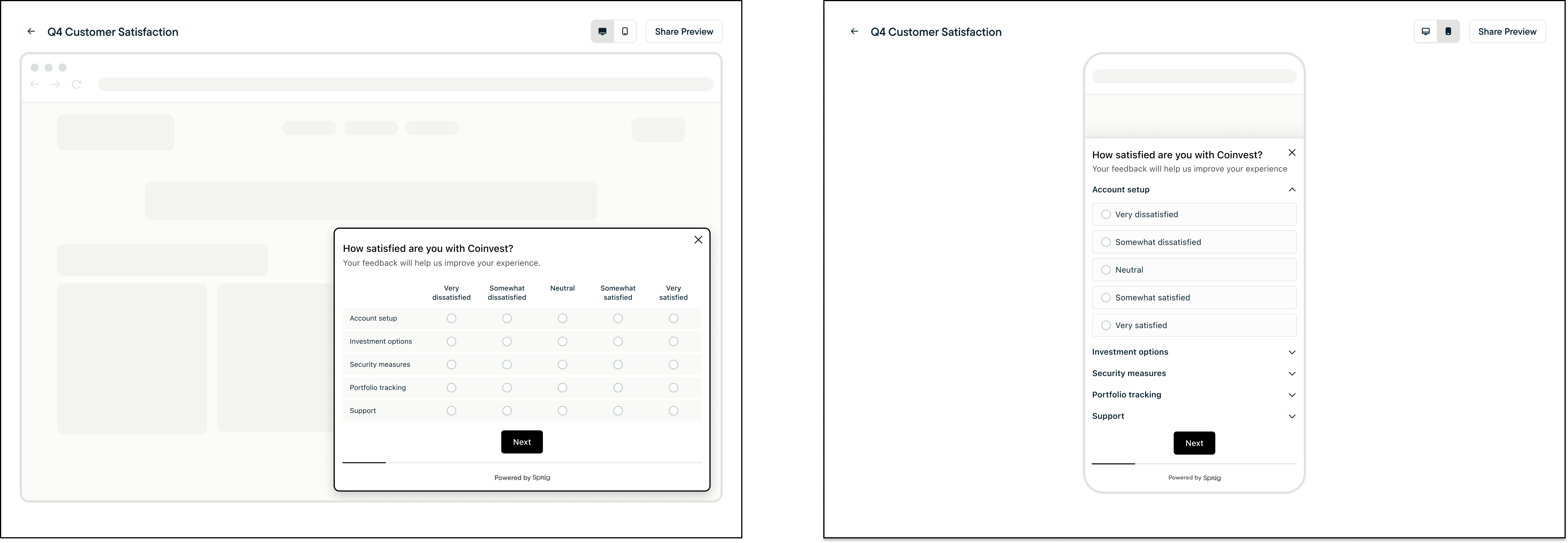

| Matrix / Accordion | Allows you to collect many responses for similar question sets. | All | All | All Plans | Both |

| Multiple Choice Single-Select | For example, What color is your hair? Possible answers may be Black, Brown, Blue, Green, Gray, Unknown/Other. | All | All | All Plans | Both |

| Multiple Choice Multi-Select | For example, Select all the fruit you like? Possible answers may be Orange, Apple, Banana, Pear, Peach, Grapefruit. | All | All | All Plans | Both |

| NPS | For example, How likely are you to recommend Sprig to a friend or colleague? Answer on a scale from 0 to 10. | All | All | All Plans | Both |

| Consent / Legal | Enable respondents to consent to disclaimers before conducting the study. | Surveys | All | All Plans | Both |

| Text / URL prompt | Used to introduce or conclude a study, or permit the study respondent to navigate to a URL. | All | All | All Plans | Both |

| Video and Voice | Enable respondents to provide video and audio-only questions and responses. | Surveys and Prototype Tests | All | All Plans | Both |

| Rank Order | Enable respondents to prioritize a set of options so you can make data-driven decisions. | Surveys | All | Enterprise Plans Only | Both |

| Recorded Task | Ask respondents to complete a task through a prototype test and enable screen capture and respondent video and voice responses. | Prototype Tests | Link | Enterprise Plans Only | Both |

| MaxDiff | Gather granular preference information on longer lists of items. | Long-Form Surveys | Link | Enterprise Plans Only | Standard only |

| Multi-Question: Single Page | Add a page that can contain multiple questions. | Long-Form Surveys | Link | Starter and Enterprise | Both |

Note: Video and Voice and Recorded Task questions are not supported in HIPAA compliant environments.

Question Types

Rating Scale

A Rating or Likert scale is a type of scale used in survey research that measures respondents’ attitudes towards a certain subject. You can change the Range (the number of points); the Labels (numeric, or a star or smiley emoji); and the Lowest and Highest Value Labels.

Skip Logic supported for Rating Scale Question Type

- is equal to

- is not equal to

- is less than

- is less than or equal to

- is greater than

- is greater than or equal to

- is submitted*

- is skipped%

*is submitted: accepted when the respondent selects any value.

%is skipped: accepted when the visitor does not respond to a specific question. Available for Video & Voice, Open Text, and Multiple Choice Multi-Select questions.
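As a rough illustration of how the operators above behave, the following Python sketch evaluates a rule against a rating. It is not Sprig's implementation: the function name, the `COMPARATORS` table, and the use of `None` for a skipped answer are all assumptions made for this example.

```python
import operator
from typing import Optional

# Hypothetical mapping from the operator names listed above to Python comparisons.
COMPARATORS = {
    "is equal to": operator.eq,
    "is not equal to": operator.ne,
    "is less than": operator.lt,
    "is less than or equal to": operator.le,
    "is greater than": operator.gt,
    "is greater than or equal to": operator.ge,
}


def rule_matches(rule: str, answer: Optional[int], target: Optional[int] = None) -> bool:
    """Return True when a rating answer satisfies a skip-logic rule.

    `answer` is None when the respondent skipped the question (an assumption
    about how a skipped answer is represented).
    """
    if rule == "is submitted":
        return answer is not None
    if rule == "is skipped":
        return answer is None
    if answer is None or target is None:
        return False  # comparison rules need both a rating and a target value
    return COMPARATORS[rule](answer, target)
```

For example, `rule_matches("is less than or equal to", 2, 3)` is true for a respondent who chose 2 on the scale, so a rule such as "is less than or equal to 3" could route low ratings to a follow-up question.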

Open Text

Open-Ended questions contain a text field in which respondents can compose their own answers. This qualitative data is sometimes harder to analyze than quantitative data. However, the Open Text question employs Sprig's Thematic Clustering service to automate the analysis stage.

Skip Logic Supported for Open Text Question Type

- is submitted*

- is skipped%

- contains

- does not contain

*is submitted: accepted when the respondent provides any value.

%is skipped: accepted when the respondent provides no answer.

Matrix / Accordion

There are minimum SDK version requirements to support all Matrix Question functionality.

| Platform | Minimum SDK Version |

|---|---|

| Web | 2.25.1 (Desktop Matrix), 2.32.0 (Display as Accordion on Desktop) |

| iOS | 4.22.3 |

| Android | 2.16.4 |

| React Native | TBD |

Display Behavior

- Desktop Web: Displays as a full-scale matrix by default, with an option to always display as an accordion on desktop

- Mobile Web: Always as accordion (full scale matrix not supported)

- Mobile Apps: Always as accordion (full scale matrix not supported)

Skip Logic Supported for Matrix Question Type

- is completely submitted*

- is only partially submitted*

*is submitted: accepted when any value is selected by the respondent.

%is skipped: accepted when the respondent provides no answer.

Multiple Choice Single-Select

The multiple choice single-select question type allows a respondent to choose only one option from a list of possible answers.

Skip Logic Supported for Multiple Choice Single-Select Question Type

- includes at least one

- does not include

- is submitted*

*is submitted: is accepted when the respondent selects any value.

Randomized Order Supported

The order of responses shown to the user can be randomized using the Randomize Order dropdown menu. Additionally, users can configure choices to be pinned to the bottom of the list of responses by adding a Pinned Choice, even alongside an Other choice.

Display as Dropdown

You can choose to display Multiple Choice Single-Select questions with a dropdown instead of a list by toggling the Show choices in a dropdown setting ON.

Multiple Choice Multi-Select

The multiple choice multi-select question type allows a respondent to choose multiple options from a list of possible answers.

Skip Logic Supported for Multiple Choice Multi-Select Question Type

In the following example, the skip logic is configured so that the user is taken to an open-ended question if Other is selected. If any of the other options are selected, the user is taken to the end of the survey.

- is exactly: evaluates to TRUE when respondent selects the EXACT options specified.

| Rule | Options | Result |

|---|---|---|

| Exactly [a, b] | [a, b] | true |

| Exactly [a, b] | [a] | false |

| Exactly [a, b] | [a, b, c] | false |

- includes all: evaluates to TRUE when respondents select ALL that are included within specified options.

| Rule | Options | Result |

|---|---|---|

| Includes all [a, b] | [a, b] | true |

| Includes all [a, b] | [a] | false |

| Includes all [a, b] | [a, b, c] | true |

- includes at least one: evaluates to TRUE when respondents select one or more options specified.

| Rule | Options | Result |

|---|---|---|

| Includes at least one [a, b] | [a] | true |

| Includes at least one [a, b] | [a, b] | true |

| Includes at least one [a, b] | [a, b, c] | true |

| Includes at least one [a, b] | [c] | false |

- does not include: evaluates to TRUE when respondents select none of the options specified.

| Rule | Options | Result |

|---|---|---|

| Does not include [a, b] | [c] | true |

| Does not include [a, b] | [c, d] | true |

| Does not include [a, b] | [a] | false |

| Does not include [a, b] | [a, b] | false |
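The four rules in the tables above map naturally onto set operations. The Python sketch below is only an illustration of that mapping; the function names are made up for this example and are not part of any Sprig API.

```python
from typing import Set


def is_exactly(selected: Set[str], specified: Set[str]) -> bool:
    """TRUE only when the selection matches the specified options exactly."""
    return selected == specified


def includes_all(selected: Set[str], specified: Set[str]) -> bool:
    """TRUE when every specified option was selected (extras are allowed)."""
    return specified <= selected


def includes_at_least_one(selected: Set[str], specified: Set[str]) -> bool:
    """TRUE when one or more of the specified options were selected."""
    return bool(selected & specified)


def does_not_include(selected: Set[str], specified: Set[str]) -> bool:
    """TRUE when none of the specified options were selected."""
    return not (selected & specified)
```

With the rule options `{"a", "b"}`, a respondent who selects `{"a", "b", "c"}` satisfies `includes_all` and `includes_at_least_one` but not `is_exactly` or `does_not_include`, which matches the rows in the tables.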

If multiple skip logic statements are true, visitors will be routed to a randomly selected true logic statement. For example:

- includes at least one a or b = skip to 2

- includes all a and b = skip to 3

If the user selects a and b, they would be routed to a randomly selected TRUE logic statement. In the example above, a visitor would have an equal chance of landing on question 2 or 3.
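The routing behavior described above can be pictured with a short Python sketch. It assumes each rule is a (predicate, target question) pair and that the choice among true rules is uniform, as the "equal chance" wording suggests; the names `EXAMPLE_RULES` and `next_question` are hypothetical.

```python
import random
from typing import Callable, List, Optional, Set, Tuple

Rule = Tuple[Callable[[Set[str]], bool], int]

# The two example statements above, written as (predicate, target question).
EXAMPLE_RULES: List[Rule] = [
    (lambda chosen: bool(chosen & {"a", "b"}), 2),  # includes at least one a or b
    (lambda chosen: {"a", "b"} <= chosen, 3),       # includes all a and b
]


def next_question(chosen: Set[str], rules: List[Rule], rng=None) -> Optional[int]:
    """Pick the target of one randomly chosen TRUE rule, or None if no rule is true."""
    rng = rng or random  # a random.Random instance can be passed for repeatable runs
    matches = [target for predicate, target in rules if predicate(chosen)]
    return rng.choice(matches) if matches else None
```

A respondent who selects only a always lands on question 2, while one who selects both a and b lands on question 2 or 3. If you need deterministic routing, write the statements so that only one can be true for any given selection.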

Response Validation Options (also known as "Max Selectable")

There are three options for constraining responses to Multiple Choice Multi-Select questions:

- Unlimited (default) - Respondents can select as many choices as they'd like.

- Maximum - Set a maximum for the number of choices respondents can select.

  - Once the maximum has been reached, remaining choices will become un-selectable.

- Range - Define a minimum and maximum number of choices that respondents can select.

  - The lowest a minimum can be is 1; the maximum can be up to the total number of choices in the list.

  - If Range is selected and the question is optional, respondents can skip the question entirely, but if they start answering it they must meet the minimum in order to move forward in the survey.

  - Respondents will get a numeric indicator in the UI to know how many options they need to select to meet the minimum. The indicator will disappear once it's been met.

Best practice: describe the validation requirements in the question or description so respondents know what's expected.

If Range or Maximum are selected for the question, a "None of the above" option cannot be added to the question. Similarly, if "None of the above" is already one of the options, you cannot change the Selection Amount to Range or Maximum. Remove the "None of the above" option to change the validation type.
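To make the three validation options concrete, here is a minimal Python sketch of the checks described above. It is an illustration under stated assumptions rather than Sprig's logic: the function and parameter names are invented, and treating an empty selection on a required question as invalid is an assumption.

```python
from typing import Optional


def selection_is_valid(
    count: int,
    total_choices: int,
    mode: str = "unlimited",
    minimum: int = 1,
    maximum: Optional[int] = None,
    optional: bool = False,
) -> bool:
    """Check whether `count` selected choices may be submitted."""
    if not 0 <= count <= total_choices:
        raise ValueError("count must be between 0 and the number of choices")
    if count == 0:
        return optional  # an optional question can be skipped entirely
    if mode == "unlimited":
        return True
    upper = total_choices if maximum is None else maximum
    if mode == "maximum":
        return count <= upper
    if mode == "range":
        if not 1 <= minimum <= upper <= total_choices:
            raise ValueError("Range needs 1 <= minimum <= maximum <= total choices")
        return minimum <= count <= upper
    raise ValueError(f"unknown mode: {mode}")
```

For a six-choice question with a Range of 2 to 4, selecting one choice is not enough to move forward, selecting three is accepted, and selecting nothing is accepted only when the question is optional.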

Randomized Order Supported

The order of responses shown to the user can be randomized using the Randomize Order dropdown menu. Additionally, users can configure choices to be pinned to the bottom of the list of responses by adding a Pinned Choice, even alongside an Other choice.

None of the Above Choice

For Multiple Choice Multi-Select questions, a None of the Above choice can be added to the list of options. When selected by a respondent, other options are automatically deselected.

Display as Dropdown

You can choose to display Multiple Choice Multi-Select questions with a dropdown instead of a list by toggling the Show choices in a dropdown setting ON.

Net Promoter Score

Net promoter score (NPS) is a widely used market research metric that typically takes the form of a single survey question asking respondents to rate the likelihood that they would recommend a company, product, or service to a friend or colleague. NPS is a commonly requested sentiment score that investors and boards review across companies, segments and verticals. For more information on NPS, click here.

Skip Logic Supported for NPS Question Type

- is equal to

- is not equal to

- is less than

- is less than or equal to

- is greater than

- is greater than or equal to

- is submitted*

*is submitted is accepted when any value is selected by the respondent.

Text / URL Prompt

The Text / URL prompt is used to introduce or conclude a study, or permit the study respondent to navigate to a URL. It comprises two text fields and a button that can go to the next question or a URL.

The steps to create a study with a Text / URL prompt are:

- Select Text / URL Prompt from the Add Question menu.

- In the Headline field, type in the purpose of the Text / URL prompt.

- Type in your question or statement in the Body field. The Body field also supports text formatting, allowing you to customize your study further. You can change the character style (bold, italicized, and underlined), create lists, and add links. This feature is not supported on iOS devices or versions of the Web SDK before v2.14.9.

- Select whether clicking the button continues to the next question in the survey or links to an external URL.

- Type in the text for the button.

- If you selected Button to link to external URL, type in the URL you want the respondent to navigate to.

Recruiting Participants also describes a use case where the Text / URL prompt is employed.

Info: You may want to send information about study respondents through the external link to identify them. If you select Button to link to external URL, you can identify the visitor through the URL.

Identifying the Visitor

If you have configured the Button to link to external URL, you can send information about study respondents through the external link to identify them. For example, with the URL placeholder {{email}}, the Visitor's email will be substituted and sent to the destination website. For Web, Link, and Android studies, the following parameters are supported:

| Placeholder | Description |

|---|---|

| {{email}} | Visitor's email address |

| {{user_id}} | Visitor's user ID |

For example, if you had specified a Button URL of:

https://website.com/schedule?id={{user_id}}&email={{email}}

and a visitor has the user ID of 23481 and the email [email protected] set,

when the user clicks on the button, they will be sent to the URL:

https://website.com/schedule?id=23481&email=[email protected]

You can also link to another Sprig study. For example, to associate both studies with the same user, you may want to send the user ID to a different study from the original study. In this case, the Button URL would be:

https://a.sprig.com/abcd-study-link-wxyz?user_id={{user_id}}

The exact format for the user ID and email name-value pairs may vary by destination site.

If the value for a parameter is not available for the visitor taking the study, that parameter will be removed. For example, if only the user ID is present, the Button URL:

https://website.com/schedule?id={{user_id}}&email={{email}} would be sent as:

https://website.com/schedule?id=23481

If neither is present, it would be just:

https://website.com/schedule.
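The substitution and removal rules above can be sketched in a few lines of Python. This is only an approximation of the described behavior: `fill_button_url` is a hypothetical helper, it handles placeholders in the query string only, it does no URL encoding, and the email address used below is a made-up example value.

```python
import re
from typing import Mapping, Optional

PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def fill_button_url(template: str, visitor: Mapping[str, Optional[str]]) -> str:
    """Replace {{user_id}} / {{email}} and drop parameters with no value."""
    base, _, query = template.partition("?")
    kept = []
    for pair in query.split("&") if query else []:
        names = PLACEHOLDER.findall(pair)
        if any(not visitor.get(name) for name in names):
            continue  # drop the whole name-value pair when a value is missing
        kept.append(PLACEHOLDER.sub(lambda m: str(visitor[m.group(1)]), pair))
    return base + ("?" + "&".join(kept) if kept else "")
```

Called with the template `https://website.com/schedule?id={{user_id}}&email={{email}}` and a visitor that has only `user_id` set to `23481`, the sketch returns `https://website.com/schedule?id=23481`; with an empty visitor it returns `https://website.com/schedule`.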

In addition, there are specific considerations for Link, Web, and Android:

Link Platform Studies

To make user ID and email available to be replaced in button URLs in link studies, include them as part of the study URL. Add them as query parameters, with the key user_id for user ID and email for email. For example, for a study with the link https://a.sprig.com/abcd-study-link-wxyz, user ID 23841, and email [email protected], format the link as https://a.sprig.com/abcd-study-link-wxyz?user_id=23841&email=[email protected], with a ? before the parameters and & between them. See here for details on how to configure this in your 3rd-party marketing platform.
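If you generate study links in your own tooling, a small helper like the Python sketch below can append the two parameters. The helper name and the sample email address are hypothetical; leaving `@` unencoded mirrors the format shown above, and whether your marketing platform expects it percent-encoded instead is something to verify.

```python
from typing import Optional
from urllib.parse import urlencode


def study_link(base_url: str, user_id: Optional[str] = None, email: Optional[str] = None) -> str:
    """Append user_id and email as query parameters, skipping missing values."""
    params = {"user_id": user_id, "email": email}
    present = {key: value for key, value in params.items() if value}
    if not present:
        return base_url
    # safe="@" keeps the address readable; other reserved characters are still encoded
    return f"{base_url}?{urlencode(present, safe='@')}"
```

For instance, `study_link("https://a.sprig.com/abcd-study-link-wxyz", "23841")` yields `https://a.sprig.com/abcd-study-link-wxyz?user_id=23841`.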

Web Studies

To make user ID and email available to be replaced in button URLs in Web studies, ensure they're set on the user before the study is displayed. See Web: Identify Users documentation. User ID is persisted in browser local storage, while email is not and should be set again with each session. Your Web SDK version must be at least v2.15.3.

Android Studies

Similar to Web, to make user ID and email available to be replaced in button URLs in Android studies, ensure they're set on the user before the study is displayed. See Android: Identifying Users documentation. User ID is persisted locally, while email is not and should be set again with each session. Your Android SDK version must be at least v2.6.0.

Consent / Legal

You can incorporate a Non-Disclosure Agreement into your study for participants to review, and obtain their consent before they proceed with the study. The copy can be in text format and/or a PDF file. You can also capture the respondent's name.

Video and Voice

In addition to Open Text questions and responses, Sprig supports video and audio-only questions and responses. Video and voice responses are automatically transcribed and optionally translated; contact Sprig Support to enable the translation service. The transcription enables the same AI analysis that is applied to text responses to be conducted on video responses, while video and audio responses add an emotional dimension that is not always visible in text-only responses.

Voice and video questions usually have a lower response rate than other study methods because they require more effort from the respondent. Consider focusing voice and video questions on a specific area of interest and sharing the study only with recipients for whom the topic is particularly relevant.

The steps to create a study with video-enabled questions and responses are:

- Select Video & Voice from the +Add Question menu.

- Click Request Permissions to enable Sprig to request access to your camera and microphone.

- Click Allow for the browser to permit Sprig access to your camera and microphone.

- Click Record to start the recording countdown, after which recording begins automatically so you can record your question. Optionally, click Video to turn off video and record audio only.

- Click the Stop icon to stop recording.

- Click the Play icon to play back your recording.

- If you are not happy with the recording, click the Delete icon to delete it and start a new recording.

- When you are happy with the recording or do not need to add any more questions, click Audience to proceed with configuring the study.

- In the Design Tab, a preview of the survey is displayed on the platform you have selected.

- When satisfied, return to the app.sprig.com browser tab and click Launch Survey.

- Distribute the survey link to prospective respondents using your usual methods.

- Note that all respondents have to give permission in their browser to enable camera and microphone access.

Skip Logic Supported for Video & Voice Question Type:

- is submitted*

- is skipped%

*is submitted: accepted when the respondent provides any response.

%is skipped: accepted when the respondent provides no answer.

Downloading a Video Response

- Click on the study from which you want to download a video response.

- Click on Responses.

- Click on Download CSV to download the responses CSV file.

- Open the CSV file in a spreadsheet.

- Locate the question and response you would like to download.

- Notice that the response cell has a field labeled downloadUrl.

- Copy that URL and paste it into your browser's address bar.

- Once the video loads, right-click on the video and select Save video as to download a copy of the video.

Content Security Policy

Supporting Video and Voice questions for Web studies may require you to update your web application's Content Security Policy (CSP). This may be required because Sprig uses third-party libraries to support Video & Voice questions, including fetching an external stream of the question and uploading the response.

The following list of sources is required to use Video & Voice questions:

- Base64 SVGs and fonts.

- Connecting to Mux (for streaming and short video uploads).

- Connecting to Google APIs (for long video uploads).

- A worker source required by our third-party library (videos).

Examples are shown in the following CSP source list:

    <meta
      http-equiv="Content-Security-Policy"
      content="img-src data: https://*.mux.com;
        connect-src blob: data: https://api.sprig.com https://*.mux.com https://storage.googleapis.com https://cdn.sprig.com https://cdn.userleap.com;
        font-src data:;
        media-src blob: https://*.mux.com;
        worker-src blob:;"
    />

Info: As every company has different security policies, the example may not reflect the complete configuration. You may need to add additional keys and values in order to comply with your own organization’s policies.
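If your site already sets a policy, for example through the Content-Security-Policy HTTP response header rather than a meta tag, the sources from the example need to be merged into it instead of replacing it. The Python sketch below shows one way to do that merge; the dictionary layout and the `merge_csp` helper are assumptions for illustration, and the source list is copied from the example above.

```python
from typing import Dict, List

# Sources taken from the example CSP above.
SPRIG_VIDEO_SOURCES: Dict[str, List[str]] = {
    "img-src": ["data:", "https://*.mux.com"],
    "connect-src": [
        "blob:", "data:", "https://api.sprig.com", "https://*.mux.com",
        "https://storage.googleapis.com", "https://cdn.sprig.com",
        "https://cdn.userleap.com",
    ],
    "font-src": ["data:"],
    "media-src": ["blob:", "https://*.mux.com"],
    "worker-src": ["blob:"],
}


def merge_csp(existing: Dict[str, List[str]], extra: Dict[str, List[str]]) -> str:
    """Merge extra sources into an existing policy and render the policy string."""
    merged = {name: list(sources) for name, sources in existing.items()}
    for name, sources in extra.items():
        current = merged.setdefault(name, [])
        current.extend(s for s in sources if s not in current)  # skip duplicates
    return "; ".join(f"{name} {' '.join(sources)}" for name, sources in merged.items())
```

One caveat with this approach: a directive such as connect-src stops falling back to default-src once it is present, so if your existing policy relied on default-src for that directive, copy those sources into it as well.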

Troubleshooting Errors

After creating your first study with Video and Voice questions, test its display on your website using these instructions. Check your browser console for any CSP-related errors if the questions are not rendering correctly or responses aren't uploaded. You may have to work with your engineering and security teams to update your website's or app's CSP settings to resolve the errors.

Rank Order

Availability: As of Nov 1, 2025, Rank Order questions are only available on the Enterprise plan.

Capture relative preferences by having respondents prioritize a set of options. Supported in Long-Form and In-Product Surveys.

Configure

- Add 2 - 10 options and optionally set them to display in a random order

- Set the labels for the top and bottom of the list to help respondents understand the criteria they should use to rank the options

Respondent Experience

Items are unranked until the respondent ranks their first item. As they drag and drop or set a specific ranking for an item, the items in the list will move to reflect the new order. Note that drag and drop is not yet supported when surveys are taken on Android devices.

Review results

Once you've collected results, view details about how each item was ranked in a histogram. Hover over the histogram to get a tool tip containing a breakdown with percentages.

MaxDiff

Availability: MaxDiff questions are only available on the Enterprise plan and for Long-Form Surveys.

MaxDiff questions, also known as Best-Worst Scaling or Maximum Difference Scaling, allow you to gather detailed preference information from respondents. They're often used when the goal is to deeply understand the importance of the items, like product improvements or brand messages, being rated.

Benefits

For researchers: mitigate position and order bias by showing items in different groupings and orders; gather deeper insights and detailed preference data.

For respondents: reduced cognitive load due to only needing to select the best and worst items from a small set of items; a speedy survey-taking experience due to automatic advancement.

Build

Building a MaxDiff is very similar to building any other question, with a few notable differences:

- The left label must be a positive descriptor, like "Best" or "Favorite" in order for the Best-Worst calculation Sprig does to work.

- The right label must be a negative descriptor, like "Worst" or "Dislike" in order for the Best-Worst calculation Sprig does to work.

- MaxDiffs require a minimum of 4 and a maximum of 24 items in the list to be rated.

Configure

Once you've added the questions, labels, and items, you can configure the experiment portion of the question. There are two options:

- Recommended - Sprig will use the number of items you've entered and the following formula to determine how to structure the question: (number of items in the question / items per set) * 3, where items per set is half the items in the list, up to a maximum of 8.

  - For example, for a question that has 24 items the formula gives (24 / 8) * 3 = 9 sets of 8 items shown to each respondent.

- Custom - Configure the number of items per set and the number of times each item should be shown to each respondent, within the ranges noted below the fields. Based on those values Sprig will calculate the number of sets to show each respondent.
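The Recommended formula can be worked through with a short Python sketch. The function name is hypothetical, and the rounding choices (rounding half the list down, rounding the number of sets up) are assumptions, since the rounding Sprig applies is not spelled out here.

```python
import math
from typing import Tuple


def recommended_design(item_count: int) -> Tuple[int, int]:
    """Return (number of sets, items per set) for the Recommended option."""
    if not 4 <= item_count <= 24:
        raise ValueError("MaxDiff questions take between 4 and 24 items")
    # Half the items in the list, capped at 8 (rounding down is an assumption).
    items_per_set = min(item_count // 2, 8)
    # (items / items per set) * 3 (rounding up is an assumption).
    set_count = math.ceil(3 * item_count / items_per_set)
    return set_count, items_per_set
```

`recommended_design(24)` returns `(9, 8)`, matching the 24-item example above, and a 10-item list works out to 6 sets of 5 items.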

Sprig's set generation is designed to:

- show items in different pairs evenly such that all pair comparisons are shown to each respondent or can be inferred

- ensure each item is shown an equal number of times with other items (so strawberries isn't always shown with kiwi and durian)

- show each item the same (minimum) number of times across all sets (some rounding occurs especially when there are an odd number of items or sets)

- show items in different positions within sets (top, bottom, middle)

- show items evenly across sets so they aren't shown only up front and not later

All sets a respondent will see are generated as the survey initially loads; the order of the items displayed will be "randomly" determined. Not all items will be shown to each respondent, and each respondent may not see each item the exact number of times the formula calls for, usually when there's an odd number of items and rounding occurs.

MaxDiff FAQs

- By default a MaxDiff question is required.

- After a survey is launched, you can only edit the existing items in a MaxDiff question; you cannot add or remove items.

- Likewise, you cannot change the configuration of a MaxDiff once the survey has been launched.

- Skip Logic for MaxDiff questions is supported when the question is submitted. Support for when it's skipped is coming soon.

Respondent Experience

The experience for respondents was built to be easy to use. After the second selection is made for a set, the respondent will automatically be moved to the next set so they don't have to take action themselves.

When the MaxDiff is required - The respondent must provide both a "best" and a "worst" response for each set they are presented with before they can move to the next question in the survey.

When the MaxDiff is optional - The respondent can skip all sets by immediately clicking the forward button on the Long-Form Survey. If they start to answer the MaxDiff, however, they must complete the set they're on before they can skip to the next question.

Results

Sprig records the "best" and "worst" selection for each set and the order of items shown in each set.

The "best" percentage is the percentage of times that item was chosen as the best across all sets. The "worst" is the percentage of times the item was chosen as worst.

The score ranges from -100 to 100 and is based on the following formula:

(number of times marked best - number of times marked worst) / total number of appearances.
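As a worked example of the formula, the Python sketch below computes a score for one item. The function name is made up, and multiplying by 100 is an assumption made so that the result lands on the stated -100 to 100 range.

```python
def maxdiff_score(best: int, worst: int, appearances: int) -> float:
    """(times marked best - times marked worst) / appearances, scaled to -100..100."""
    if appearances <= 0:
        raise ValueError("the item must have appeared at least once")
    if best < 0 or worst < 0 or best + worst > appearances:
        raise ValueError("best and worst counts cannot exceed appearances")
    return 100 * (best - worst) / appearances  # the factor of 100 is an assumption
```

An item shown 8 times, marked best 3 times and worst once, scores 25.0. An item marked best every time it appears scores 100.0, and one marked worst every time scores -100.0.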

Results on the survey summary page are based on "completed" questions, where respondents filled in all of the sets they were shown. To view partial responses, download the CSV.
